Online lecture: AI Responsibility Gaps

AI Accountability Dialogue Series

On 12 February 2026, we are organising the opening online lecture of the AI Accountability Dialogue Series, focusing on the timely topic of “responsibility gaps” in artificial intelligence systems. Our guest speakers will be Daniela Vacek and Jaroslav Kopčan.

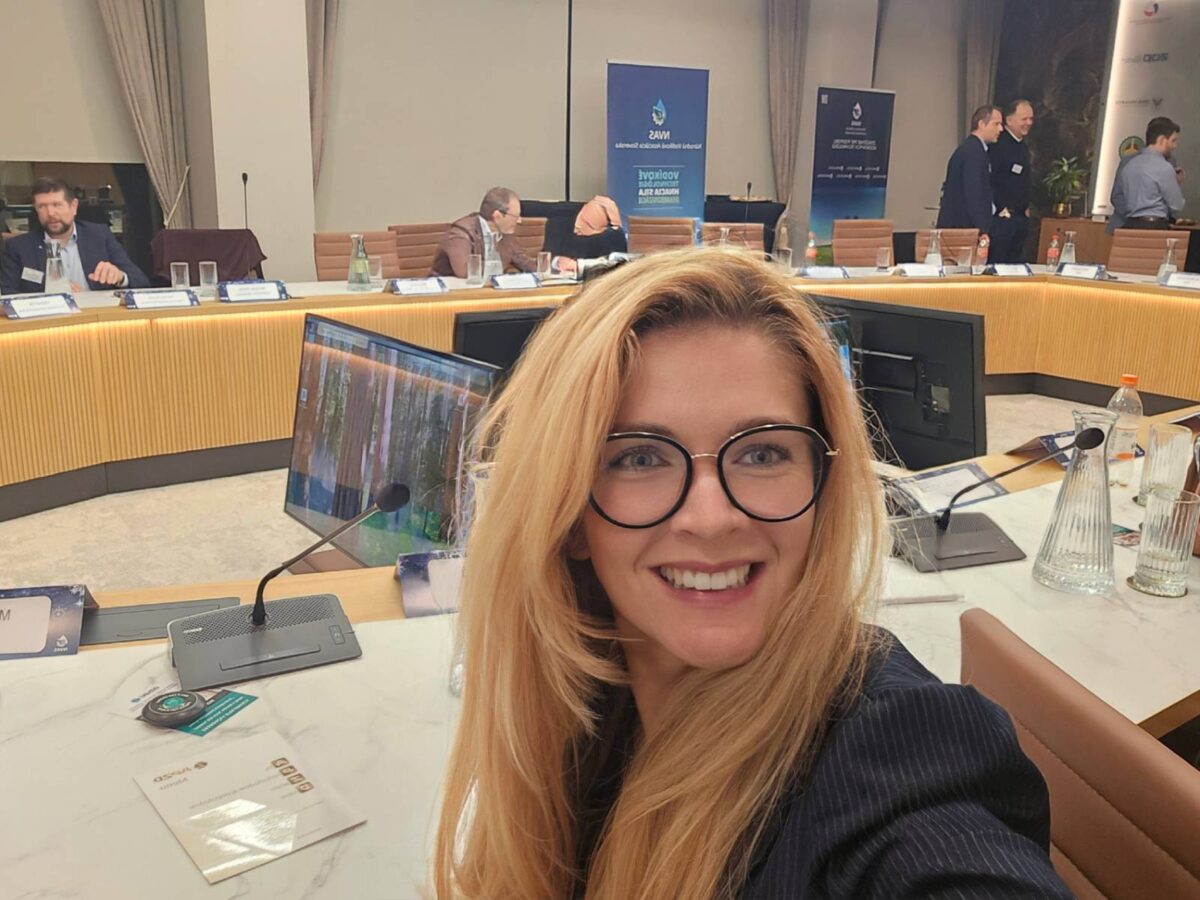

Daniela Vacek is a Slovak philosopher specializing in the ethics of artificial intelligence, responsibility, analytic aesthetics, and philosophical logic. She works at the Institute of Philosophy of the Slovak Academy of Sciences (SAS), the Kempelen Institute of Intelligent Technologies (KinIT), and the Faculty of Arts of Comenius University in Bratislava. She is a laureate of the ESET Science Award 2025 in the category Outstanding Young Scientist in Slovakia under 35, and she leads the APVV-funded project Philosophical and Methodological Challenges of Intelligent Technologies (TECHNE).

Jaroslav Kopčan works as a research engineer at the Kempelen Institute of Intelligent Technologies (KinIT), where he specializes in natural language processing (NLP) and explainable artificial intelligence (XAI). His research focuses on automated content analysis and explainability techniques for underrepresented languages. He works on the development of interpretable NLP systems and tools, with an emphasis on knowledge distillation.

Date and Time: Thursday, 12 February 2026 | 10:00 CET

Venue: Online | Free participation

The lecture will be conducted in English.

There is an extensive debate on responsibility gaps in artificial intelligence. These gaps correspond to situations of normative misalignment: someone ought to be responsible for what has occurred, yet no one actually is. They are traditionally considered to be rooted in a lack of adequate knowledge of how an artificial intelligence system arrived at its output, as well as in a lack of control over that output. Although many individuals involved in the development, production, deployment, and use of an AI system possess some degree of knowledge and control, none of them has the level of knowledge and control required to bear responsibility for the system’s good or bad outputs. To what extent is this lack of knowledge and control at the level of outputs present in contemporary AI systems?

From a technical perspective, relevant knowledge and control are often limited to the general properties of artificial intelligence systems rather than to specific outputs. Actors typically understand the system’s design, training processes, and overall patterns of behaviour, and they can influence system behaviour through design choices, training methods, and deployment constraints. However, they often lack insight into how a particular output is produced in a specific case and do not have reliable means of intervention at that level.

The lecture will offer several insights into these questions. In addition, we will show that the picture is even more complex. There are different forms of responsibility, each associated with distinct conditions that must be met. Accordingly, some forms of responsibility remain unproblematic even in the case of AI system outputs, while others prove to be more challenging.
