NCC Slovakia on a Business Mission to Portugal During the State Visit of the Slovak President

On 26–27 November 2025, the National Supercomputing Centre (NSCC Slovakia) took part in a business mission to Portugal held on the occasion of the state visit of the President of the Slovak Republic, Peter Pellegrini. Božidara Pellegrini represented NSCC on the mission and also acted on behalf of the National Competence Centre for HPC (NCC Slovakia), the NSCC division responsible for the EuroCC project and for helping Slovak stakeholders access EuroHPC JU supercomputing resources. The delegation comprised nearly 20 innovative Slovak companies and institutions active in digital solutions, information technologies, smart cities, and energy.

In their opening remarks, the Presidents of Portugal and Slovakia highlighted the shared commitment of both countries to innovation, education, and human capital – values that form a natural foundation for strengthening technological cooperation.

Exploring Portugal’s Innovation Ecosystem

The first day of the mission was dedicated to visiting three key innovation hubs in Lisbon. The delegation began at AI Hub by Unicorn Factory Lisboa, a centre supporting startups that develop artificial intelligence solutions. This was followed by a tour of Unicorn Factory Lisboa – Beato Innovation District, one of Europe’s largest technology campuses. In the afternoon, the delegation visited Taguspark, home to more than 160 technology and research companies. These visits gave Slovak participants valuable opportunities to network and deepen technological dialogue with their Portuguese counterparts.

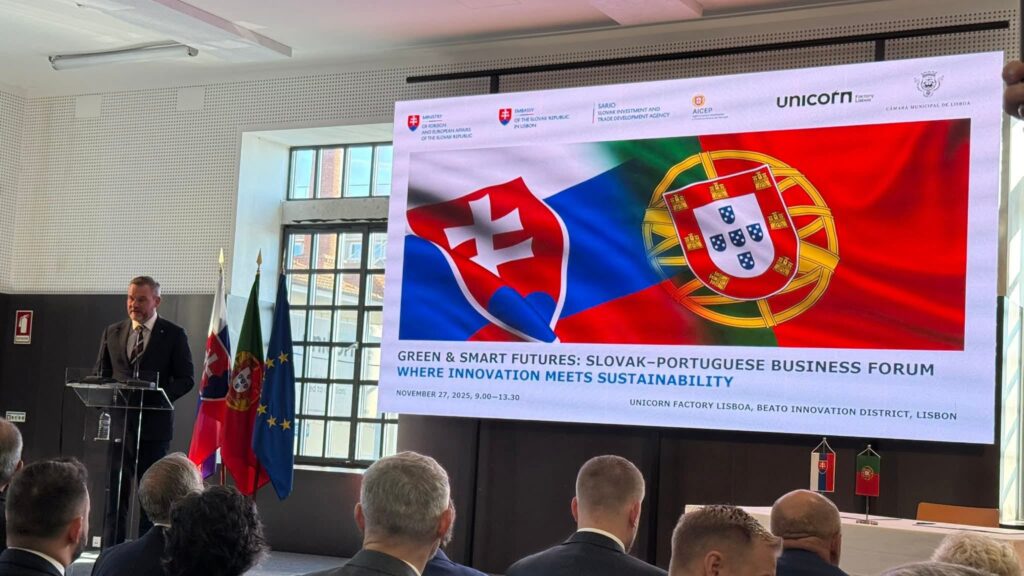

Slovak–Portuguese Business Forum

The second day centred on the Slovak–Portuguese Business Forum “Green & Smart Futures”, held at Unicorn Factory Lisboa. The forum was opened by the Presidents of both countries, who emphasised the importance of “building bridges of innovation between the Atlantic and the heart of Europe.” The programme included presentations on the investment environment, contributions from SARIO and AICEP, the signing of memoranda of cooperation and, above all, intensive B2B meetings during which companies identified technological synergies and discussed potential future collaborations.

Linking the Mission to the Role of NCC Slovakia

The participation of NCC Slovakia confirmed the growing interest of Slovak companies in solutions based on artificial intelligence, simulations, and work with large datasets – areas that naturally require high-performance computing resources.

Discussions during the B2B meetings showed that an increasing number of companies encounter infrastructure limits when developing AI models or processing large-scale data. NCC Slovakia helps them identify where HPC can deliver the greatest added value and guides them in designing projects that can effectively leverage advanced computational resources.

Within the EuroCC project, NCC Slovakia also provides educational support through free courses and expert webinars, and enables Slovak companies, universities, and research organisations to gain access to European supercomputing capacities via EuroHPC JU access calls. In this way, NCC Slovakia remains a key partner for Slovak innovation and research, helping transform technological ambitions into concrete projects powered by modern HPC resources.
